DirectX 11 class graphics card performance

With the poor performance in mind, we started to analyze what was going on. For now I dropped the idea of a VGA performance overview testing dozens of cards and decided to move forward with solely a GeForce GTX 780 Ti, on which we'll fire off all kinds of image quality settings to find out what the heck is causing the bad performance and stuttering.

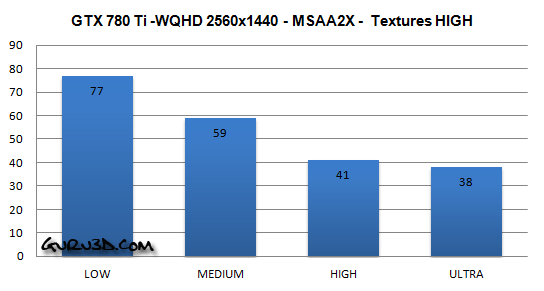

We locked the resolution at the most preferred gaming resolution for high-end / enthusiast class gamers, 2560x1440 (WQHD). We then lowered the texture quality setting from Ultra (recommended for 3GB graphics cards) to High (recommended for 2GB graphics cards) to give the card a little more video memory to fiddle and fool around in. Above are the results with merely 2x MSAA enabled across the four image quality modes available in-game.

So High quality textures (pretty normal) combined with the High image quality setting is roughly the maximum for a GeForce GTX 780 Ti at 2560x1440. Wowzers, that is a 700 USD graphics card in a PC that cannot run Watch Dogs properly at its Ultra quality settings. I find that baffling, really. Even at the High image quality setting you will still get in-game stutters if you get your AA settings wrong (more on that later though).

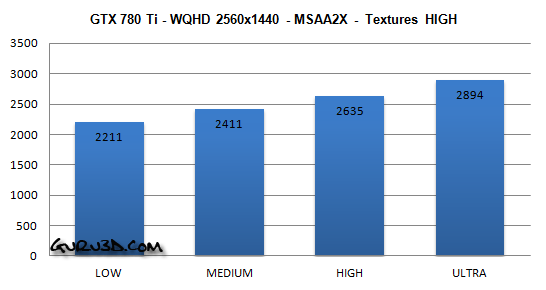

Now with the texture quality set at High (2GB mode) combined with the High image quality mode, you are already passing 2500 MB of used graphics memory, and that is at merely 2x MSAA. So any graphics card with 2GB of memory set at High/High @ 2560x1440 would break down, as data starts to swap in and out of the frame buffer. With such a card you'd need to stay at Full HD / 1920x1080 maximum.
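To put that memory reading in perspective, here is a small back-of-the-envelope sketch in Python. It assumes 4 bytes per sample for a render target (for example an RGBA8 color buffer) and treats 2GB as 2048 MB and 3GB (the GTX 780 Ti's capacity) as 3072 MB; the helper names are hypothetical and a real engine allocates many buffers in various formats, so this only illustrates the scale of things, not how Watch Dogs actually budgets its memory.

```python
# Back-of-the-envelope VRAM sketch (illustrative only).
# Assumption: 4 bytes per sample, e.g. an RGBA8 color buffer.

MIB = 1024 * 1024


def render_target_mib(width: int, height: int, msaa: int, bytes_per_sample: int = 4) -> float:
    """Size of ONE render target with `msaa` samples per pixel, in MiB."""
    return width * height * msaa * bytes_per_sample / MIB


def headroom_mb(used_mb: int, card_mb: int) -> int:
    """Positive: spare graphics memory; negative: data no longer fits on the card."""
    return card_mb - used_mb


if __name__ == "__main__":
    # A single 2x MSAA color target is small compared to the total footprint,
    # which suggests textures and other assets make up most of the 2500 MB.
    for w, h in [(1920, 1080), (2560, 1440)]:
        print(f"{w}x{h} 2xMSAA target: {render_target_mib(w, h, 2):.1f} MiB")

    measured_mb = 2500  # reading from the High/High, 2x MSAA test above
    for card_mb in (2048, 3072):  # 2GB card vs 3GB card (GTX 780 Ti)
        print(f"{card_mb} MB card: headroom {headroom_mb(measured_mb, card_mb)} MB")
```

On a 2GB card the headroom comes out negative (roughly 450 MB short), which is exactly the situation where data has to be shuffled in and out of graphics memory and stutters follow.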

But let's break things down even further, next page please.