Enabling HDR for Windows - Gaming and Video

As stated, I've been working with this monitor for a while now and, on this page, I wanted to share some of my experiences in the form of a guide. Enabling HDR is not something that happens by default. Luckily, some advancements have been made in Windows 10 over the past few months. Basically, HDR needs to be switched on or off separately for the OS, for each game, and even for movie/media playback. We'll divide this page into these three segments.

Windows 10 Desktop HDR mode

Once you have an HDR screen, you'll probably get all trigger happy about getting the Windows 10 desktop to run in HDR mode. My advice: leave HDR disabled for the Windows desktop. The benefits are slim, really, unless you need it for a software application that works in a wider color space. In my experience, enabling HDR in desktop mode creates more errors and anomalies than the pleasure of having HDR is worth. For example, lots of applications cannot deal with the 10-bit color space; heck, even Google Chrome messes up. However, if you want to try it out, simply type HDR in search or Cortana and go to its settings. BTW, while the 2017 Fall update added HDR support, the Windows 10 Spring 2018 update (version 1803 or higher) is mandatory here as far as I am concerned, as Microsoft made significant improvements in that codebase to get HDR better supported.
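If you'd rather check the version requirement from a script than dig through the settings, here is a minimal Python sketch. It assumes that version 1803 corresponds to build 17134 and that the version string looks like `10.0.17134` (which is what `platform.version()` reports on Windows 10). The helper name `build_supports_hdr` is made up for this example and is not part of any Windows API.

```python
import platform

# Assumption: Windows 10 version 1803 (Spring 2018 update) is build 17134.
MIN_HDR_BUILD = 17134


def build_supports_hdr(version: str, min_build: int = MIN_HDR_BUILD) -> bool:
    """Return True if a 'major.minor.build' string is Windows 10 build 17134 or newer."""
    parts = version.split(".")
    # Reject anything that does not look like three numeric fields.
    if len(parts) < 3 or not all(p.isdigit() for p in parts[:3]):
        return False
    major, _minor, build = (int(p) for p in parts[:3])
    return major >= 10 and build >= min_build


if __name__ == "__main__":
    if platform.system() == "Windows":
        print("Build OK for HDR:", build_supports_hdr(platform.version()))
    else:
        print("Not running on Windows; nothing to check.")
```

This only tells you whether the OS build is new enough; it says nothing about whether your monitor, cable or GPU driver can actually do HDR.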

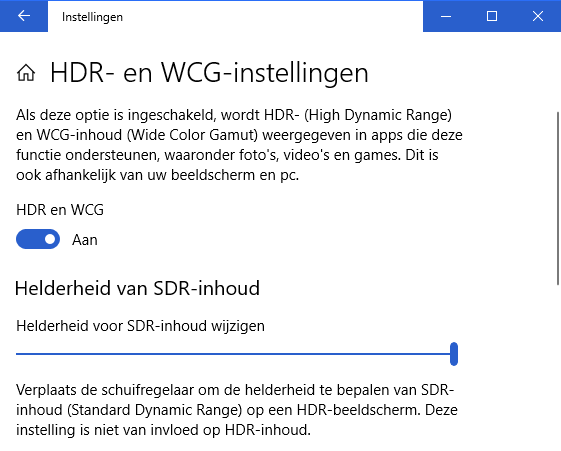

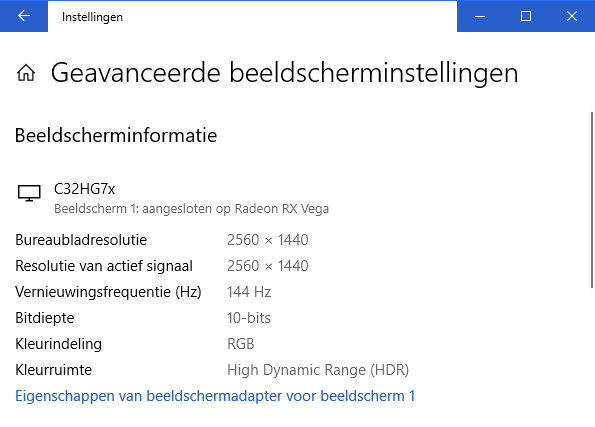

Pardon the Dutch lingo, but you simply enable HDR and WCG (wide color gamut), after which your Desktop now runs in 10-bit. In advanced display properties, you can actually check the status. You would now probably like to use that SDR slider and move it all the way to the right.

And yes, we can verify that Windows is now running in 10-bit HDR. Unfortunately, it does not indicate which color space it is actually using (e.g. DCI-P3 or, say, Rec.2020), and I could not find a way to verify it. But, hey, look again, you're learning some Dutch today, man! So yes, switching Windows to HDR is an easy thing to do, but the Windows interface itself, as well as all the software you use in Windows, is simply not made for HDR, and herein you'll find lots of problems, as it all uses the conventional (sRGB) brightness and color space. Basically, you'll see a lot of applications that end up looking anywhere from dark and gray to dull in color. My advice: only switch to HDR if you are actually going to use software that produces HDR images.

HDR video on the PC

A secondary use for HDR would be, of course, watching videos, series, and movies on the PC. I'll be blunt and short here: it's a friggin mess, whether that is Ultra HD Blu-ray, Amazon, Netflix or YouTube HDR.

Wanna watch a UHD Blu-ray with HDR on the PC? Practically not possible. The one Ultra HD Blu-ray drive that handles it would be the Pioneer BDR-S11J, which is hardly available anywhere. Even if you got your hands on such an optical drive, you need a processor with Intel Software Guard Extensions (SGX) support, which means you need a Skylake generation (or newer) processor. SGX needs to be implemented and activated in the UEFI BIOS; I doubt motherboard manufacturers even know what it is, ergo I think it's mostly not enabled at a firmware level. Then the next clusterfrack: you're gonna need a GPU that supports both HDCP 2.2 and AACS 2.0. Advanced Access Content System 2.0 is something neither Nvidia nor AMD GPUs offer just yet at driver level (at least the last I heard of it). But we're not there yet: you also need UHD Blu-ray compatible playback software, CyberLink PowerDVD 17, and guess what? This software requires an Intel Core processor starting at Kaby Lake, and you need to run it using the integrated GPU. Myeah, you'd be better off connecting a standalone UHD HDR Blu-ray player directly to the screen.

Streaming then... Amazon Prime, Netflix and even YouTube: on Windows, we could not get any of them working in HDR. The latest drivers should all offer at least Netflix HDR support, but I could not get it working. And of course we know that nobody downloads anything; however, should you somehow end up with an HDR video file, then you should know that most video playback software on Windows does not (yet) support HDR correctly. We mostly got SDR output.

Windows 10 gaming in HDR mode

We now finally get to the good stuff, to what it is all about! For gaming in HDR, most games do not need the Windows 10 desktop to be set to 10-bit HDR; some, however, do require this (COD WW II / Shadow of Mordor). For titles like SW Battlefront 2, Battlefield 1, Far Cry 5 and F1 2017, HDR is simply selected in-game. Once the game switches to full-screen and HDR is activated, you're good to go. The big problem, of course, is finding games that actually support HDR; that number is limited, yet growing alongside the adoption rate of HDR screens. Some recent titles that support HDR:

- Formula 1 2017

- Final Fantasy XV

- Battlefield 1

- Destiny 2

- Mass Effect: Andromeda

- Shadow Warrior 2

- Resident Evil 7

- Star Wars: Battlefront 2

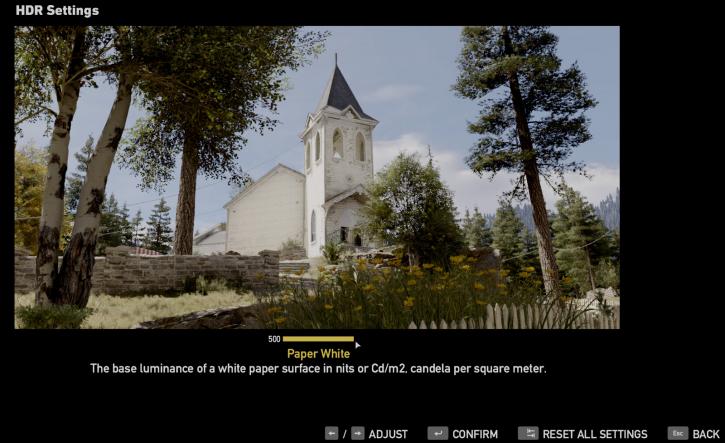

As an example I took Far Cry 5; let's walk through the game settings.

Regardless of whether you have HDR enabled in Windows 10 or not, HDR will work once you select it in-game. For FC5, simply go to your display options; all the way at the bottom you can see the 'Enable HDR' setting. Enable it and get a pair of sunglasses ;)

Once enabled, obviously confirm your new setting. Small tip: the same menu holds the brightness options, and after you've enabled HDR a new HDR setting becomes visible there, showing the number of nits. Far Cry 5 at its highest setting will apply 500 nits (cd/m²) on bright white. Maxing that slider out, you're gonna notice, is a bit much.

Now here we get to the part that is troublesome for any reviewer: we cannot really show HDR in photos or videos. In Far Cry 5, you'll immediately notice the difference. Lots of retina-searing brightness kicks in and, in my experience, that's not always better on the eyes. It's quite a lot, and I found myself lowering that cd/m² value towards 350 nits really fast.
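To put those nit values in perspective, here is a small Python sketch of the PQ curve (SMPTE ST 2084) that HDR10 uses to turn absolute brightness into a signal value. It assumes the game outputs HDR10/PQ and that the 10-bit signal is full range; neither is something I could confirm for Far Cry 5. The function names are mine, picked for this example.

```python
# SMPTE ST 2084 (PQ) constants, written as the exact fractions from the standard.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32

PEAK_NITS = 10000.0  # PQ is defined for luminance up to 10,000 cd/m²


def pq_encode(nits: float) -> float:
    """Map absolute luminance in nits to a normalized PQ signal value (0..1)."""
    y = min(max(nits, 0.0), PEAK_NITS) / PEAK_NITS
    y_m1 = y ** M1
    return ((C1 + C2 * y_m1) / (1.0 + C3 * y_m1)) ** M2


def pq_code_10bit(nits: float) -> int:
    """Quantize the PQ signal to a 10-bit code (assumption: full range, 0..1023)."""
    return round(pq_encode(nits) * 1023)


if __name__ == "__main__":
    for level in (100, 350, 500, 1000):
        print(f"{level:>5} nits -> PQ {pq_encode(level):.4f}, 10-bit code {pq_code_10bit(level)}")
```

The curve is strongly non-linear: roughly half of the signal range is spent on everything up to about 100 nits, so the step from 350 to 500 nits is only a small move on the signal side, even though your eyes will tell you otherwise.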

Subjective Experiences

Again, we cannot photograph or show HDR. The effect of HDR is a brightness range and color gamut that you cannot display in a photo on your 8-bit SDR monitor. You really need to experience HDR in a real-world situation to understand it. It's not just brightness, but deeper and more dynamic colors as well; the BT.2020 color space, or the DCI-P3 color space from the movie industry, for example, can display colors that a regular sRGB/BT.709 screen simply cannot reproduce. Everything is much more vivid to the eyes. Predominantly I am positive about HDR, but that doesn't fly for everybody. Mass Effect: Andromeda offers spectacular light effects in HDR. Battlefield 1 looked really nice but the output felt a bit dimmed; F1 2017 offered better contrast but was a little toned down in colors. Destiny 2 really pops with rich and saturated, but not too saturated, colors; really amazing to see. In the end, it remains a puzzle how color spaces are dealt with per game. We think some games will fit BT.709 colors into BT.2020, and some monitors will interpret BT.2020 as BT.709, resulting in a smaller color range. That said, HDR can also be a little too much on the eyes, and things will need to develop for some time to come. But when done and dealt with properly, it's impressive; that's the subjective message I am trying to get across.
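To illustrate what "fitting BT.709 colors into BT.2020" means in practice, here is a minimal Python sketch using the linear-light conversion matrix from ITU-R BT.2087, rounded to four decimals. This is only an illustration of the math, not a claim about what any of the games above actually do internally; the function name is made up for the example.

```python
# BT.709 -> BT.2020 conversion for linear-light RGB (ITU-R BT.2087, rounded).
BT709_TO_BT2020 = (
    (0.6274, 0.3293, 0.0433),
    (0.0691, 0.9195, 0.0114),
    (0.0164, 0.0880, 0.8956),
)


def bt709_to_bt2020(rgb):
    """Re-express a linear BT.709 RGB triple in BT.2020 primaries."""
    return tuple(
        sum(coeff * channel for coeff, channel in zip(row, rgb))
        for row in BT709_TO_BT2020
    )


if __name__ == "__main__":
    for name, color in (("white", (1.0, 1.0, 1.0)), ("red", (1.0, 0.0, 0.0))):
        converted = bt709_to_bt2020(color)
        print(name, tuple(round(c, 4) for c in converted))
```

White stays white, but the most saturated BT.709 red ends up as a mix of all three BT.2020 channels. In other words, content converted this way keeps the colors it already had and fills only part of the wider container, which would fit the toned-down look of some titles.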