2 - Team AMD in da house - The Architecture

Team AMD in da house

Hey, do you remember early last year when NVIDIA dominated the mainstream market with a relatively poor performing GeForce 8600 GT? Once AMD released the series 3000 Radeon products I was pleased. See, they moved onwards to a 256-bit memory bus in the midrange segment and presented the Radeon HD 3850 & 3870 initially as the leading mainstream products. The 3000 series just butchered the GeForce 8600 GT in both price and performance. It took NVIDIA a while to properly react to that: initially we noticed price drops in the 8600 range, then the 8800 GT drastically dropped in price to counteract the Radeon 3000 series, and finally the competing product as we still see it today was released, the GeForce 9600 GT. Over the past six months or so the best bang for buck products were just that: the GeForce 9600 GT, the Radeon HD 3850 and of course the Radeon HD 3870.

These three products are still showing really nice gaming performance in the mid-range segment, until the series 4800 that is. Though not touching the niche high-range market, AMD is doubling up that performance for you at nearly the same price. Quite an achievement. With that little intro let's talk a little about the GPU and the differences between the two models released today.

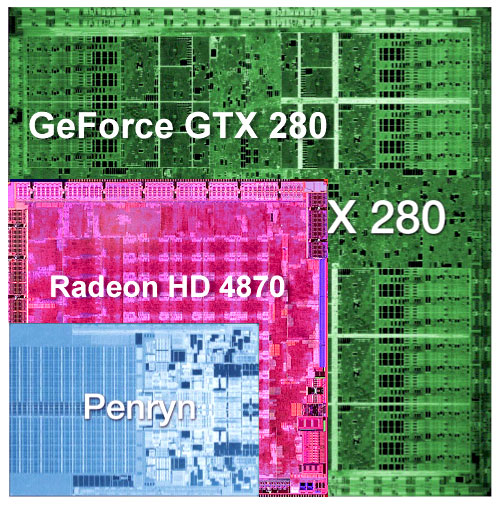

So I spilled the beans already a little on the front page. Today AMD brings you products based on the RV770 (codename) GPU. They put nearly a billion transistors into that GPU, which is built on a 55nm production process with a 260 mm² die. The chip is roughly 16 mm wide and high, which for a 55nm AMD product is still quite large. The number of transistors for a midrange product like this is extreme, and typically it's best to relate that directly to the number of shader processors to get a better understanding. But first let's look at some nice examples of die sizes of current architectures.

I'm pretty convinced NVIDIA will soon be moving towards 55nm on the GTX 200 series as well.

Now please understand that ATI uses a different shader processor architecture than NVIDIA, so do not compare the numbers directly. The new Radeon 4850/4870 have 800 scalar processors (320 on the HD 3800 series) and a significant forty texture units (16 in the last-gen architecture).

The stream/compute/shader processors (can we please just name them all shader processors?) definitely had a good number of changes; if you are into this geek talk, you'll spot 10 SIMD clusters each carrying 80 32-bit shader processors (this adds up to 800). If I remember correctly, one SIMD unit can handle double precision.

Much like we recently noticed in NVIDIA's GTX 200 architecture, the 80 scalar stream processors in each SIMD unit have 16KB of local data cache/buffer that is shared among the shader processors. Next to the hefty shader processor increase you've probably already noticed the massive number of texture units. In the last generation product we noticed 16 units; the 4800 series has 40 units.
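For those who like to see the bookkeeping, here is a minimal Python sketch that simply multiplies out the figures quoted above. The constant names are made up for illustration, and the total local data figure assumes one 16KB buffer per SIMD cluster, which is how the description above reads but is not something confirmed here.

```python
# Figures quoted in the text; the constant names are illustrative only.
SIMD_CLUSTERS = 10
SHADERS_PER_CLUSTER = 80
LOCAL_DATA_KB_PER_CLUSTER = 16  # assumption: one 16KB buffer per SIMD cluster

LAST_GEN_SHADERS = 320        # HD 3800 series
LAST_GEN_TEXTURE_UNITS = 16   # HD 3800 series
TEXTURE_UNITS = 40            # HD 4800 series

total_shaders = SIMD_CLUSTERS * SHADERS_PER_CLUSTER
total_local_data_kb = SIMD_CLUSTERS * LOCAL_DATA_KB_PER_CLUSTER

shader_ratio = total_shaders / LAST_GEN_SHADERS
texture_ratio = TEXTURE_UNITS / LAST_GEN_TEXTURE_UNITS

print(f"Shader processors: {total_shaders}")
print(f"Local data store (all clusters): {total_local_data_kb} KB")
print(f"Shader increase over last gen: {shader_ratio}x")
print(f"Texture unit increase over last gen: {texture_ratio}x")
```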

When you do some quick math, that's 2.5x the number of shader processors over the last-gen product, and 2.5x the number of texture units. That's ... a pretty grand change folks. Since the GPU has 800 shader processors it can produce the raw power of 1000 to 1200 GFLOPS in single precision. It's a bit lame and inaccurate to do but ... divide the number of ATI's scalar shader processors by 5 and you'll roughly get the equivalent in NVIDIA stream processors. You could (in an abstract way) say that the 4800 series has 160 shader units, if that helps you compare it to NVIDIA's scaling. Again there's nothing scientific or objective about that explanation.
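To show where the 1000 and 1200 GFLOPS figures likely come from, here is a rough Python sketch. It assumes each shader processor retires one multiply-add (counted as 2 floating point operations) per clock, and it uses the 625 MHz and 750 MHz core clocks of the 4850 and 4870. The function name and the divide-by-5 helper variable are purely illustrative; this is theoretical peak throughput, not measured performance.

```python
def peak_gflops(shader_processors: int, core_clock_mhz: float,
                flops_per_clock: int = 2) -> float:
    """Theoretical peak in GFLOPS.

    Assumes every shader processor issues one multiply-add per clock,
    which counts as 2 floating point operations.
    """
    return shader_processors * flops_per_clock * core_clock_mhz / 1000.0


# (shader processors, core clock in MHz) as quoted in this article
cards = {
    "Radeon HD 4850": (800, 625),
    "Radeon HD 4870": (800, 750),
}

results = {name: peak_gflops(sp, mhz) for name, (sp, mhz) in cards.items()}
for name, gflops in results.items():
    print(f"{name}: {gflops:.0f} GFLOPS")

# The unscientific rule of thumb from the text: divide by 5 to get a
# rough count that compares to NVIDIA's stream processors.
nvidia_style_units = 800 // 5
print(f"Rough NVIDIA-style shader units: {nvidia_style_units}")
```

The same function applied to 320 shader processors at 670 MHz gives roughly 429 GFLOPS, which lines up with the last-gen Radeon HD 3850.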

Effectively, combined with the clock speed and memory, this product can poop out 1000 to 1200 GigaFLOPS of performance, depending on how that is measured of course. But still, with an entry product at 199 USD for the 4850 and 299 USD for the 4870 that's just an awful lot of computing power. Let's compile a chart:

| | ATI Radeon HD 4850 | ATI Radeon HD 4870 | ATI Radeon HD 3850 | ATI Radeon HD 3870 |
|---|---|---|---|---|
| # of transistors | 965 million | 965 million | 666 million | 666 million |
| Stream Processing Units | 800 | 800 | 320 | 320 |
| Clock speed | 625 MHz | 750 MHz | 670 MHz | 775+ MHz |
| Memory Clock | 2000 MHz GDDR3 (effective) | 3600 MHz GDDR5 (effective) | 1.66 GHz GDDR3 (effective) | 2.25 GHz GDDR3 (effective) |
| Math processing rate (Multiply Add) | 1000 GigaFLOPS | 1200 GigaFLOPS | 428 GigaFLOPS | 497+ GigaFLOPS |
| Texture Units | 40 | 40 | 16 | 16 |
| Render back-ends | 16 | 16 | 16 | 16 |
| Memory | 512MB GDDR3 | 512MB GDDR5 | 512MB GDDR3 | 512MB GDDR3/4 |
| Memory interface | 256-bit | 256-bit | 256-bit | 256-bit |
| Fabrication process | 55nm | 55nm | 55nm | 55nm |
| Power Consumption (peak) | ~110W | ~160W | ~90W | ~105W |

When you look at the effective memory bandwidth of the 4870 you at the very least must go "wow".

That memory bandwidth is due to the Radeon HD 4870 using GDDR5 memory instead of GDDR3, obviously. Though the real clock frequency is 900 MHz, the effective data rate is four times that: GDDR5 transfers data at double the rate of double data rate memory. This gives the 4870 an astounding 115.2 GB/s at 3600 MHz effective, while still being on the 256-bit memory bus. That is in fact more memory bandwidth than the GeForce GTX 260 (111.9 GB/s), which has a much wider 448-bit memory bus but uses GDDR3.
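The bandwidth number is easy to reproduce. Here is a small Python sketch, assuming the usual peak formula (effective transfer rate times bus width in bytes) and the x4 multiplier described above; the function and variable names are illustrative, and the GTX 260 figure is simply the one quoted in the text, not recomputed.

```python
def bandwidth_gb_s(effective_mhz: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s.

    effective transfer rate (transfers/s) x bus width (bytes per transfer)
    """
    return effective_mhz * 1e6 * (bus_width_bits / 8) / 1e9


# Radeon HD 4870: 900 MHz real clock, x4 effective data rate for GDDR5
real_clock_mhz = 900
effective_mhz = real_clock_mhz * 4           # 3600 MHz effective
hd4870 = bandwidth_gb_s(effective_mhz, 256)  # 256-bit bus

# Radeon HD 4850: 2000 MHz effective GDDR3 on the same 256-bit bus
hd4850 = bandwidth_gb_s(2000, 256)

GTX_260_QUOTED_GB_S = 111.9  # figure quoted in the article

print(f"HD 4870: {hd4870:.1f} GB/s")
print(f"HD 4850: {hd4850:.1f} GB/s")
print(f"HD 4870 ahead of GTX 260: {hd4870 > GTX_260_QUOTED_GB_S}")
```

The point of the comparison: the faster memory more than makes up for the narrower bus, at least on paper.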

Next to internal efficiency improvements we also stumble into an updated UVD engine (HD video decoder/enhancer) which we'll talk about in a second.

GPU Computing: Much like NVIDIA just announced with the help of CUDA, ATI (AMD) recently announced cooperation with Intel's Havok engine. Though currently far less substantial, physics calculations over the GPU are in the works. As it works right now, physics calculations (for example debris or cloth) are computed on the CPU in games that support the Havok API. AMD is working on moving these functions to the GPU, thus having the stream processors (shader engine) compute them.

It's a work in progress, and during a recent press briefing we asked when we can expect driver support for GPU Havok physics. The answer was unfortunately a bit cold: it could be a matter of two months, yet could also easily be the end of the year. The fact remains though that the Series 4000 does support the feature and AMD's driver team is working on it.