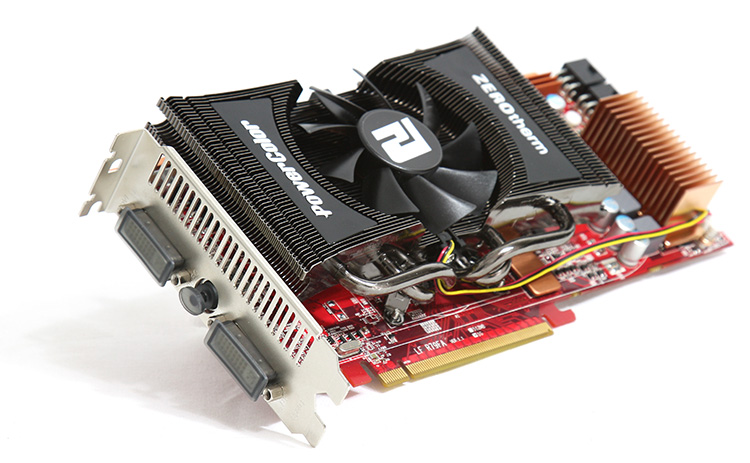

AMD's Radeon series 4890 RV790 ASIC

As you guys know, ATI's Radeon HD 4850 and 4870 both use the same GPU (graphics processor), codenamed RV770. The Radeon HD 4890 used in today's tested product uses the newer RV790 GPU. Honestly, in most respects it is almost exactly the same GPU, but a better-yielding version which can take a slightly higher voltage and clock much higher.

The chip that powers the ATI Radeon HD 4890 is, however, a new one, with changes to the physical design to facilitate higher clock speeds. This is why the chip has the new designation (RV790).

AMD put nearly a billion transistors into the GPU. It is still built on a 55nm process (260 mm² die size), and not 40nm as some of you still believe. The chip is 16 mm wide and 16 mm high, which is actually quite large for a 55nm product.

Being based on the RV770 graphics processor, the Radeon HD 4890 series graphics processors also have 800 scalar processors and forty texture units (16 in the last-gen architecture). The stream/compute/shader processors (can we please just name them all shader processors?) definitely had a good number of changes; if you are into this geek talk, you'll spot 10 SIMD clusters, each carrying 80 32-bit shader processors (this adds up to 800). If I remember correctly, one SIMD unit can handle double precision.

Much like the NVIDIA GTX 200 architecture, each SIMD unit has 16KB of local data cache/buffer that is shared among its shader processors. Next to the hefty shader processor increase, you have probably already noticed the massive number of texture units. The last-generation product had 16 units; the 4800 series has 40.

It's a bit inaccurate, but divide the number of ATI's scalar shader processors by 5 and you'll get a count that is roughly comparable to NVIDIA's stream processors. You could (in an abstract way) say that the 4800 series has 160 shader units, if that helps you compare it to NVIDIA's scaling. Again, there's nothing scientific or objective about that explanation.
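For those who like to see the numbers worked out, here is a minimal Python sketch of the shader counting described above. The function and constant names are made up for illustration, and the divide-by-5 step is only the rough rule of thumb from the text, not a measured performance ratio.

```python
# Figures quoted in the text; all names here are hypothetical.
SIMD_CLUSTERS = 10            # SIMD clusters in the GPU
SHADERS_PER_CLUSTER = 80      # 32-bit shader processors per cluster
RULE_OF_THUMB_DIVISOR = 5     # rough, unscientific ATI-to-NVIDIA divisor


def total_shader_processors(clusters: int, per_cluster: int) -> int:
    """Total shader processors across all SIMD clusters."""
    return clusters * per_cluster


def rough_nvidia_equivalent(ati_shaders: int,
                            divisor: int = RULE_OF_THUMB_DIVISOR) -> int:
    """Rule-of-thumb 'equivalent' shader unit count, nothing more."""
    return ati_shaders // divisor


if __name__ == "__main__":
    total = total_shader_processors(SIMD_CLUSTERS, SHADERS_PER_CLUSTER)
    print("Shader processors:", total)
    print("Rough equivalent units:", rough_nvidia_equivalent(total))
```

With the figures above this works out to 800 shader processors and roughly 160 "equivalent" units.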

Anyhow. With 800 shader processors combined with the new, higher clock frequencies, it can produce 1360 GigaFLOPS of raw power in single precision. Well, depending on how that is measured, of course.
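That 1360 GigaFLOPS figure appears to follow from simple arithmetic. The sketch below assumes each shader processor completes one multiply-add per clock and that a multiply-add counts as two floating-point operations; that is the usual way such peak numbers are quoted, but it is an assumption here. The function name is hypothetical.

```python
# Assumption: one multiply-add per shader processor per clock,
# counted as two floating-point operations.
FLOPS_PER_MULTIPLY_ADD = 2


def peak_gflops(shader_processors: int, core_clock_mhz: float) -> float:
    """Theoretical single-precision peak in GigaFLOPS."""
    flops_per_clock = shader_processors * FLOPS_PER_MULTIPLY_ADD
    # MHz * operations per clock gives MegaFLOPS; divide by 1000 for GigaFLOPS.
    return flops_per_clock * core_clock_mhz / 1000.0


if __name__ == "__main__":
    for clock_mhz in (625, 750, 850):
        print(clock_mhz, "MHz ->", peak_gflops(800, clock_mhz), "GigaFLOPS")
```

At 850 MHz this gives 1360 GigaFLOPS, and the 625 MHz and 750 MHz clocks from the comparison chart give 1000 and 1200 GigaFLOPS. Keep in mind this is a theoretical peak, not something you will see in a game.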

Let's make a comparison chart for you to understand the differences between the Radeon HD 4870 and 4890:

| | ATI Radeon HD 4850 | ATI Radeon HD 4870 | ATI Radeon HD 4890 |
|---|---|---|---|
| # of transistors | 956 million | 956 million | 959 million |
| Stream Processing Units | 800 | 800 | 800 |
| Clock speed | 625 MHz | 750 MHz | 850 MHz (and higher) |
| Memory Clock | 1980 MHz (effective) | 3600 MHz (effective) | 3900 MHz (effective) |
| Math processing rate (Multiply Add) | 1000 GigaFLOPS | 1200 GigaFLOPS | 1360 GigaFLOPS |
| Texture Units | 40 | 40 | 40 |
| Render back-ends | 16 | 16 | 16 |
| Memory & type | 512MB GDDR3 | 512/1024MB GDDR5 | 1024MB GDDR5 |
| Memory Bandwidth | 64 GB/s | 115 GB/s | 125 GB/s |
| Memory interface | 256-bit | 256-bit | 256-bit |
| Fabrication process | 55nm | 55nm | 55nm |
| Power Consumption (peak) | 110W | 160W | 190W |
| Power Consumption (Idle) | 30W | 90W | 60W |

* The above are the reference specifications, not yet the PowerColor PCS+ specifications.
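The memory bandwidth numbers in the chart can be sanity-checked from the effective memory clock and the bus width. This is a small sketch assuming bandwidth equals the effective clock times the bus width in bytes; the function name is hypothetical, and the results land close to, but not exactly on, the rounded figures in the chart.

```python
def memory_bandwidth_gbs(effective_clock_mhz: float, bus_width_bits: int) -> float:
    """Approximate peak memory bandwidth in GB/s (1 GB = 10^9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    # MHz * bytes per transfer gives MB/s; divide by 1000 for GB/s.
    return effective_clock_mhz * bytes_per_transfer / 1000.0


if __name__ == "__main__":
    # Effective memory clocks from the chart, all on a 256-bit interface.
    for clock_mhz in (1980, 3600, 3900):
        bandwidth = memory_bandwidth_gbs(clock_mhz, 256)
        print(f"{clock_mhz} MHz effective -> {bandwidth:.1f} GB/s")
```

For the 3900 MHz effective clock this comes to about 124.8 GB/s, which matches the 125 GB/s listed after rounding.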

So, comparing apples to apples, the Radeon HD 4890 is, simply put, a faster-clocked product: a graphics update.