Three primary architectural blocks

To understand the Turing architecture a little better, we need to look at the GPU as something holding three architectural blocks: the traditional shader engine you know from last-gen GPUs like Pascal, the Tensor cores you know from Volta, and the new RT cores for the hardware acceleration of DXR (DirectX Raytracing). The symbiosis of these three types of processors forms what is called the Turing architecture.

Shader processors

We'll go a little more in-depth on the shader engine on the next pages, but the first and most important block is the traditional shader engine that we all know. A full Turing TU102 graphics processor houses 4608 shader processors. The GeForce RTX 2080 Ti has 4352 of those cores activated; the RTX 2080, which is based on the smaller TU104 chip, has 2944 cores. We would not be surprised to see a GeForce RTX Titan down the line, of course, with the full 4608 shader processors activated.

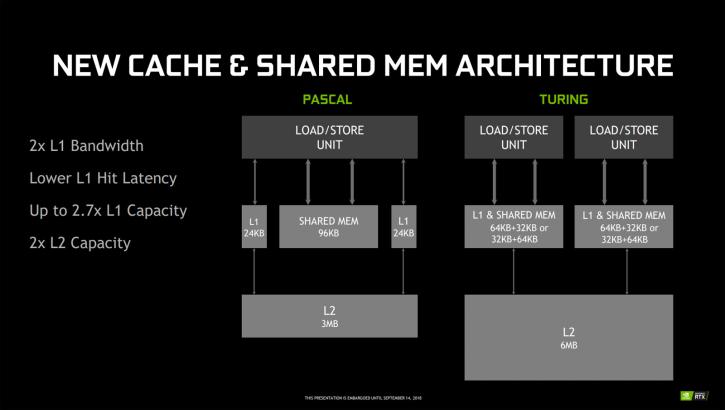

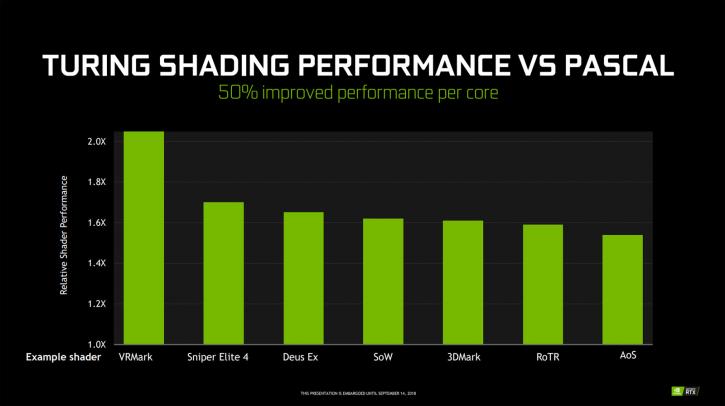

A fully enabled Turing processor will have 96 ROPs, 288 texture units, and a 384-bit memory bus. The engine has been overhauled and offers a new superscalar architecture with concurrent FP and INT execution datapaths and enhanced L1 caches. Cache-wise, there is a dual L1 cache and a shared 6 MB L2 cache. The L1 bandwidth has been doubled compared to Pascal, making it more efficient and thus faster. NVIDIA mentions that shading performance for Turing can be up to 50% faster compared to Pascal.

Tensor processors

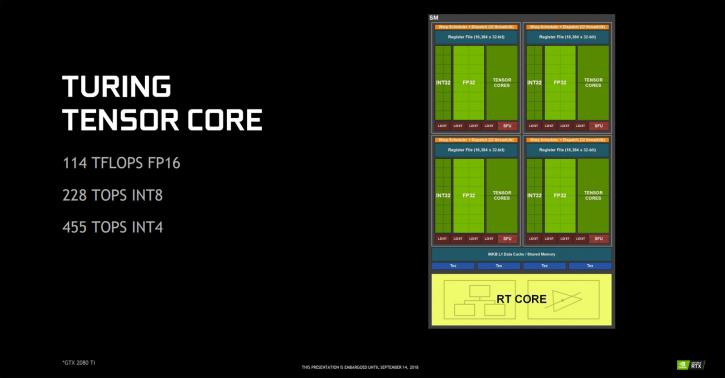

You've seen these introduced in the Volta architecture. Tensor cores are optimized processing blocks that serve a very specific function: they are exceptionally good at anything AI and deep learning. On the biggest Turing processor, NVIDIA adds 576 Tensor cores. What nobody really expected, though, was that these would be enabled for the consumer products. So why would NVIDIA put Tensor cores in a consumer GPU? Actually, it is quite simple: Tensor cores can tackle problems and challenges that would normally take ages to compute. You could use the Tensor cores to have enemy troops in a game driven by far more intelligent AI; imagine clever NPCs learning what you are doing and reacting to that.

In terms of numbers, the 576 Tensor cores of the TU102 GPU break down to eight per SM, or two in each processing block within an SM. Each Tensor core can perform up to 64 floating-point fused multiply-add (FMA) operations per clock using FP16 inputs. The eight Tensor cores in an SM together perform 512 FP16 multiply-and-accumulate operations per clock, or 1024 FP operations per clock in total, since each FMA counts as two operations. The new INT8 precision mode works at double this rate, or 2048 integer operations per clock.
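The per-clock arithmetic above is easy to check with a few lines of Python. This is only a sketch built from the figures quoted in the text; the function name `tensor_throughput` and the `clock_mhz` parameter are made up for illustration, and the 1500 MHz default is a placeholder, not the specification of any card.

```python
# Figures quoted in the text for a full TU102 (all values are per clock).
TENSOR_CORES_TOTAL = 576
TENSOR_CORES_PER_SM = 8
FMA_PER_TENSOR_CORE = 64   # FP16 fused multiply-adds per Tensor core
OPS_PER_FMA = 2            # one multiply plus one add


def tensor_throughput(clock_mhz=1500.0):
    """Derive per-SM and chip-wide Tensor core rates.

    clock_mhz is a hypothetical placeholder clock, used only to show
    how a per-clock figure turns into operations per second.
    """
    sm_count = TENSOR_CORES_TOTAL // TENSOR_CORES_PER_SM
    fma_per_sm = TENSOR_CORES_PER_SM * FMA_PER_TENSOR_CORE
    fp16_ops_per_sm = fma_per_sm * OPS_PER_FMA
    int8_ops_per_sm = fp16_ops_per_sm * 2  # INT8 runs at double the FP16 rate
    fp16_ops_per_clock_chip = fp16_ops_per_sm * sm_count
    # Convert ops per clock to tera-operations per second.
    fp16_tops = fp16_ops_per_clock_chip * clock_mhz * 1e6 / 1e12
    return {
        "sm_count": sm_count,
        "fma_per_sm": fma_per_sm,
        "fp16_ops_per_sm": fp16_ops_per_sm,
        "int8_ops_per_sm": int8_ops_per_sm,
        "fp16_ops_per_clock_chip": fp16_ops_per_clock_chip,
        "fp16_tops_at_clock": fp16_tops,
    }


if __name__ == "__main__":
    for name, value in tensor_throughput().items():
        print(f"{name}: {value}")
```

The 512, 1024, and 2048 figures fall straight out of the multiplication, and dividing 576 by eight gives the 72 SMs of a full chip. Swap in the real boost clock of a given card to get an estimate for it.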

You could also use Tensor cores for things like anti-cheat detection or material enhancement. However, I'll keep things simple and relevant to gaming, as NVIDIA added a feature called DLSS, and that is the primary use of the Tensor cores at the time of writing. We'll talk about this first big feature, 'Deep Learning Super-Sampling', on the following pages.

Raytracing (RT) processors

It has been a dream for NVIDIA to bring ray tracing to gaming and, as they mentioned, developers share that wish. Roughly ten years ago NVIDIA started working on solving the ray tracing challenge. As you know, Microsoft announced DXR, short for DirectX Raytracing, a while ago. Through the DXR API, developers can use advanced functions that are built on actual raytracing. NVIDIA's new graphics cards can accelerate that API with the RT cores and its RTX technology. DXR is a step towards broader use of ray tracing and, in the end, better and more realistic in-game image quality. Building the capability into DirectX will enable a wider swath of developers to experiment with a technology that was previously the purview of high-end content creation applications. Companies including Epic, Remedy, and Electronic Arts have already begun experimenting with adding real-time raytracing capabilities to their game engines, and with the launch of the fall 2018 update of Windows 10, DXR will be included in the distribution.

So what is raytracing really? With raytracing, you are basically mimicking the behavior, look, and feel of a real-life environment in a computer-generated 3D scene. Wood looks like wood, but the leaking resin will shine and refract its environment and lighting accurately. Fur on animals looks like actual fur, and things like glass and waves of water refract light the way they would in reality, based on the surroundings and lights/rays.

Can true 100% ray tracing be applied in games? Short answer: no, only partially. As you have just read, Microsoft has released an extension to DirectX: DirectX Raytracing (DXR). NVIDIA, on their end, announced RTX, the combination of hardware and software that makes use of the DirectX Raytracing API. NVIDIA has dedicated hardware built into their GPUs to accelerate certain ray tracing features. In the first wave of launches, you are going to see a dozen or so games adding support for the new RTX technology. Truth be told, in games you can really notice the difference. How much of a performance effect RTX will have on games is something we'll need to look deep into as well, but that is going to take time, as not even Microsoft has released Windows 10 with the DXR API yet.

So while rasterization has been the default renderer for a long time, we can now add to it the ability to trace rays, using the same algorithm for complex and deep soft shadows, reflections, and refractions. Combining rasterization and ray tracing was the way for NVIDIA to get to where they are today. The raster and compute phases are what NVIDIA has been working on for the last decade. Game developers now have a whole new suite of techniques available to create better-looking graphics. On a more personal note, though: we really need to call this hybrid raytracing, as you combine the best of both worlds.
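To make "the same algorithm for shadows and reflections" a bit more tangible, here is a minimal CPU-side sketch in Python. It is not how DXR or the RT cores are programmed; it assumes a toy scene made of spheres, and all function names (`intersect_sphere`, `reflect`, `in_shadow`) are invented for this illustration. The point is only that one intersection routine serves both a shadow ray and a reflection ray, which in a hybrid renderer would be cast from surface points that the rasterizer has already found.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def scale(a, s):
    return tuple(x * s for x in a)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(a):
    length = math.sqrt(dot(a, a))
    return scale(a, 1.0 / length)


def intersect_sphere(origin, direction, center, radius):
    """Distance along a normalized ray to the nearest hit, or None."""
    oc = sub(origin, center)
    b = 2.0 * dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - 4.0 * c  # quadratic discriminant (a == 1 for unit rays)
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    for t in ((-b - root) / 2.0, (-b + root) / 2.0):
        if t > 1e-6:  # ignore hits behind or exactly at the ray origin
            return t
    return None


def reflect(direction, normal):
    """Mirror an incoming direction around a unit surface normal."""
    return sub(direction, scale(normal, 2.0 * dot(direction, normal)))


def in_shadow(point, light_pos, spheres):
    """Shadow ray: is any sphere between the point and the light?"""
    to_light = sub(light_pos, point)
    distance = math.sqrt(dot(to_light, to_light))
    direction = normalize(to_light)
    for center, radius in spheres:
        t = intersect_sphere(point, direction, center, radius)
        if t is not None and t < distance:
            return True
    return False


if __name__ == "__main__":
    scene = [((0.0, 0.0, -5.0), 1.0)]  # one sphere: (center, radius)
    eye = (0.0, 0.0, 0.0)
    print(intersect_sphere(eye, (0.0, 0.0, -1.0), *scene[0]))
    print(reflect((0.0, 0.0, -1.0), (0.0, 0.0, 1.0)))
    print(in_shadow(eye, (0.0, 0.0, -10.0), scene))
```

A reflection is then just another call to `intersect_sphere` along the direction returned by `reflect`. Real games trace against triangle meshes rather than spheres, and that is the part the dedicated hardware is there to speed up.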