Power efficiency - Dyn Clock Adjustment

Power efficiency

Moving to a 28nm chip means you can do more with less as the product becomes smaller. The GK104 GPU comes with roughly 3.54 billion transistors. The TDP (maximum board power) sits at a very respectable 170 Watts. So on the one hand you have a graphics card that uses less power, yet on the other it offers more performance. That's always a big win!

The GeForce GTX 670 comes with two 6-pin power connectors to get enough current and a little spare for overclocking. This boils down to: 2x 6-pin PEG = 150W, plus the PCIe slot = 75W, for 225W available (in theory). We'll measure all that later on in the article, but directly related is the following chapter.
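For those who like to see the sums, here is a minimal sketch of that power budget. The 75W figures are the nominal limits for a PCIe slot and a 6-pin PEG connector, the 170W is the TDP quoted above, and the function name is our own, purely for illustration.

```python
# Nominal power limits, in watts.
PCIE_SLOT_W = 75
SIX_PIN_PEG_W = 75

def available_board_power(six_pin_connectors):
    """Theoretical power available: the slot plus all 6-pin connectors."""
    return PCIE_SLOT_W + six_pin_connectors * SIX_PIN_PEG_W

budget = available_board_power(2)  # GTX 670: two 6-pin connectors
tdp = 170                          # board power quoted in this article
headroom = budget - tdp            # theoretical spare for overclocking

print(budget, headroom)
```

On paper that leaves 55W of spare capacity above the rated TDP.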

Dynamic Clock Adjustment technology

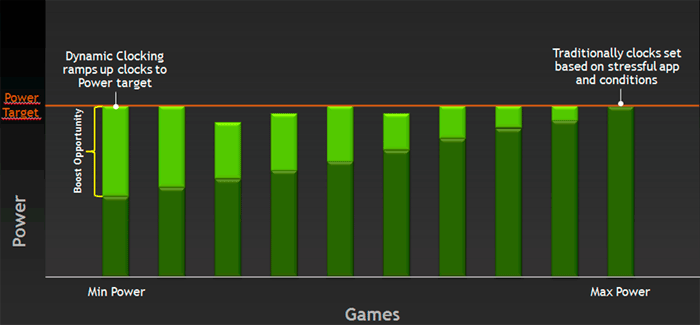

Kepler GPUs feature a Dynamic Clock Adjustment technology, and we can explain it easily without any complexity.

Typically when your graphics card idles, the card's clock frequency will go down... yes? Well, obviously Kepler architecture cards will do this as well, yet now it works the other way around too. If in a game the GPU has room left for some more, it will increase the clock frequency a little and add some extra performance. You could say that the graphics card is maximizing its available power threshold.

Even simpler: DCA resembles Intel's Turbo Boost technology a little bit by automatically increasing the graphics core frequency by 5 to 10 percent when the card works below its rated TDP.

The base clock of the reference GeForce GTX 670 in 3D mode is 915 MHz; it can now boost towards 980 MHz or even higher when the power envelope allows it to do so. The 'Boost Clock' however is directly related to the maximum board power, so it might clock even higher as long as the GPU sticks within its power target. We've seen the GPU run in excess of 1080 MHz for brief periods of time.

Overclocking will work the same way: GPU boost continues to work while overclocking, and it stays restricted within the TDP bracket. We'll show you that in our overclocking chapter.
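To make the idea a bit more tangible, here is a toy model of a power-limited boost loop. This is a sketch under our own assumptions, not NVIDIA's actual algorithm: the 13 MHz step, the 1084 MHz ceiling and the power readings are all made up for illustration, while the 915 MHz base clock and 170W power target come from the text above.

```python
BASE_MHZ = 915         # reference base clock quoted in the article
POWER_TARGET_W = 170   # board power quoted in the article
STEP_MHZ = 13          # hypothetical step size, for illustration only
CEILING_MHZ = 1084     # hypothetical upper limit, for illustration only

def next_clock(current_mhz, board_power_w):
    """Step the clock up while under the power target, down when over it."""
    if board_power_w < POWER_TARGET_W:
        return min(current_mhz + STEP_MHZ, CEILING_MHZ)
    return max(current_mhz - STEP_MHZ, BASE_MHZ)

def simulate(power_trace_w, start_mhz=BASE_MHZ):
    """Return the clock after each power sample in the trace."""
    clocks = []
    clock = start_mhz
    for power in power_trace_w:
        clock = next_clock(clock, power)
        clocks.append(clock)
    return clocks

# Made-up power readings: a light scene followed by a heavy one.
print(simulate([150, 150, 150, 150, 150, 175, 175]))
```

In this model the clock climbs from 915 MHz to 980 MHz while the card draws less than its target, then backs off once the load pushes power over it. The clock never drops below the base clock.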

3D Vision Surround - 4 displays - Adaptive VSync

3D Vision surround on a Single GPU

Whether or not NVIDIA would like to admit it, ATI's Eyefinity certainly changed the way we all deal with multi-monitor setups. As a result, the series 500 products brought multi-monitor support for gaming with three monitors, called Surround Vision. The downside was that you needed at least two cards set up in SLI for this to work.

It would not have made any sense to leave this unaddressed with Kepler, so Surround Vision and 3D Surround Vision are now supported with one card. That means you can game, even in 3D, on three monitors with just one GeForce GTX 680, as the new display engine can drive four monitors at once. Kepler's display engine fully supports HDMI 1.4a, 4K monitors (3840x2160) and multi-stream audio.

Using up to 4 displays

We just had a quick chat about 3D Vision Surround, but Kepler goes beyond three monitors. You can connect four monitors, use three for gaming and set up one on top (3+1) to check your email or something else desktop related.

Summing up Display support

- 3D Vision Surround running off single GPU

- Single GPU support for 4 active displays

- DisplayPort 1.2, HDMI 1.4a high speed

- 4K monitor support - full 3840x2160 at 60 Hz

In combination with new desktop management software you can also tweak your desktop output a little, like centering your Windows taskbar on the middle of the three monitors or maximizing windows to a single display. With the software you can also set up and apply bezel correction. Interesting though is a new feature that allows you to use hotkeys to see game menus hidden by the bezel.

PCIe Gen 3

The series 600 cards from NVIDIA are all PCI Express Gen 3 ready. This update provides a 2x faster transfer rate than the previous generation, which delivers capabilities for next generation extreme gaming solutions. So as opposed to current PCI Express slots, which are at Gen 2, PCI Express Gen 3 has twice the available bandwidth, 32GB/s for an x16 slot, along with improved efficiency and compatibility. As such it will offer better performance for current and next gen PCI Express cards.

To make it even more understandable, going from PCIe Gen 2 to Gen 3 doubles the bandwidth available to the add-on cards installed, from 500MB/s per lane to roughly 1GB/s per lane. So a Gen 3 PCI Express x16 slot is capable of offering 16GB/s (or 128Gbit/s) of bandwidth in each direction. That results in 32GB/s bi-directional bandwidth.
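The per-lane numbers scale up to a full slot as follows. This is a small sketch using the rounded per-lane figures from the text; the function and table names are our own.

```python
# Rounded per-lane, per-direction throughput in MB/s, as quoted in the text.
PER_LANE_MB_S = {2: 500, 3: 1000}

def slot_bandwidth_gb_s(gen, lanes=16):
    """Return (per-direction, bi-directional) bandwidth in GB/s."""
    per_direction = PER_LANE_MB_S[gen] * lanes / 1000
    return per_direction, per_direction * 2

for gen in (2, 3):
    one_way, both_ways = slot_bandwidth_gb_s(gen)
    # 1 byte = 8 bits, so GB/s times 8 gives Gbit/s.
    print(f"Gen {gen} x16: {one_way} GB/s ({one_way * 8} Gbit/s) "
          f"per direction, {both_ways} GB/s bi-directional")
```

For an x16 slot that works out to 8GB/s per direction on Gen 2 and 16GB/s per direction on Gen 3.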

Adaptive VSync

In the process of eliminating screen tearing NVIDIA will now implement adaptive VSYNC in their drivers.

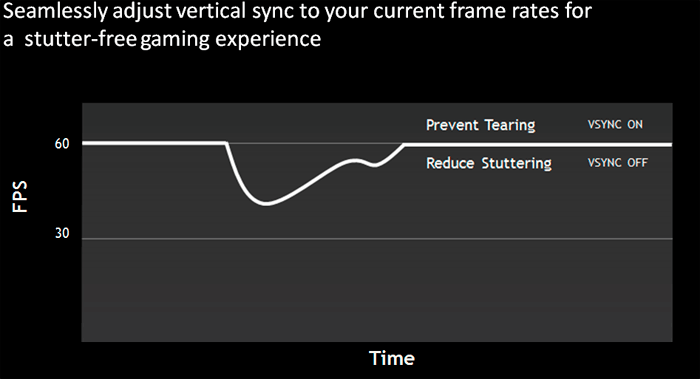

VSync is the synchronization of your graphics card and monitor's abilities to redraw the screen a number of times each second (measured in FPS or Hz). It is an unfortunate fact that if you disable VSync, your graphics card and monitor will inevitably go out of sync. This happens whenever your FPS exceeds the refresh rate (e.g. 120 FPS on a 60Hz screen), and that causes screen tearing. The precise visual impact of tearing differs depending on just how much your graphics card and monitor go out of sync, but usually the higher your FPS and/or the faster your movements are in a game, such as rapidly turning around, the more noticeable it becomes.

Adaptive VSync is going to help you out on this matter. While normal VSync addresses the tearing issue when frame rates are high, it introduces another problem: stuttering. The stuttering occurs when frame rates drop below 60 frames per second, causing VSync to fall back to 30Hz and other whole-number fractions of 60 such as 20 or even 15 Hz.
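Why exactly those steps? Here is a simplified sketch, assuming plain double-buffered VSync and a constant render time per frame: each frame then has to stay on screen for a whole number of refresh intervals. The function name is our own.

```python
import math

def vsync_frame_rate(render_fps, refresh_hz=60):
    """Displayed frame rate under simple double-buffered VSync."""
    # A frame that misses a refresh waits for the next one, so every frame
    # occupies a whole number of refresh intervals (at least one).
    intervals = max(1, math.ceil(refresh_hz / render_fps))
    return refresh_hz / intervals

for fps in (120, 60, 59, 45, 29, 19):
    print(fps, "->", vsync_frame_rate(fps))
```

In this model a GPU that manages 59 FPS ends up displaying only 30, and anything under 30 FPS drops to 20 and then 15, which is exactly the stepping you perceive as stutter.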

To deal with this issue NVIDIA has applied a solution called Adaptive VSync. The feature will be integrated in the series 300 drivers, minimizing that stuttering effect. When the frame rate drops below 60 FPS, the Adaptive VSync technology automatically disables VSync, allowing frame rates to run at their natural rate, effectively reducing stutter. Once frame rates return to 60 FPS, Adaptive VSync turns VSync back on to reduce tearing.
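The decision itself is simple enough to sketch in a few lines. This is an illustration of the behaviour described above, not NVIDIA's driver code; the function name and return format are our own assumptions.

```python
def adaptive_vsync(render_fps, refresh_hz=60):
    """Return (vsync_on, displayed_fps) for a given render rate."""
    # VSync stays on only while the GPU can keep up with the display.
    vsync_on = render_fps >= refresh_hz
    # With VSync on the output is capped at the refresh rate; with it off
    # frames are shown as fast as they are rendered (tearing is possible).
    displayed_fps = float(refresh_hz) if vsync_on else float(render_fps)
    return vsync_on, displayed_fps

for fps in (120, 60, 45):
    print(fps, "->", adaptive_vsync(fps))
```

So at 45 FPS you get 45 FPS without VSync rather than a drop to 30, and at 120 FPS the output is capped at 60 with VSync on.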

This feature will be optional in the upcoming GeForce series 300 drivers, not a default.