Page 3 (Testing)

GPU thermals

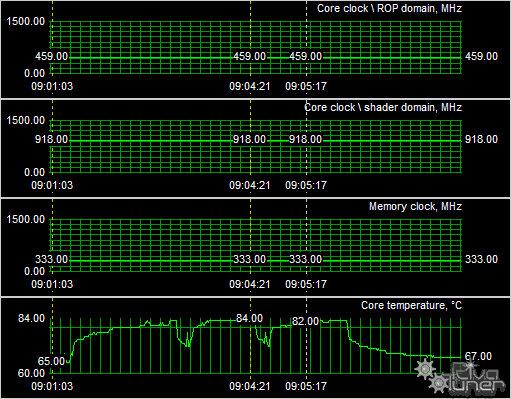

Right, testing time. First we will have a look at the temperature of the graphics core. It's a big issue these days as new hardware tends to get hotter and hotter. In the end that can affect the product's lifespan, and it can also heat up other components in your PC, which can cause instability in a worst-case scenario. We measured at a room temperature (with air conditioning) of roughly 22 Degrees C with our own RivaTuner (what else is there, eh?).

For the chart, temperature is displayed in Degrees Celsius: lower = better.


The fact is that the card is showing pretty high results: it idles at ~65 Degrees C and peaks at 85 Degrees C at full load. The weird thing is... this card still overclocks like a junky seeking dope. For whatever reason the GPU copes really well with this temperature.

Powah !

We'll now show you some tests we have done on the overall power consumption of the PC. Looking at it from a performance versus wattage point of view, the power consumption is really not bad. Our test system consists of a Core 2 Extreme X6800 processor, the nForce 680i SLI mainboard, a passive water-cooling solution on the CPU, an HD-DVD drive and a WD Raptor drive.

The test methodology is simple: we look at the peak wattage during a 3DMark05 session with hefty IQ settings to verify power consumption. It's a good load test as both GPU and CPU are utilized really hard here. Please do understand that you are not looking at the power consumption of the graphics card, but the overall power consumption of the entire PC.

| Videocard | System under full load (Watts) |
| --- | --- |
| GeForce 8500 GT | 186 |

We had a total system wattage peak of roughly 188 Watts, which goes for pretty much any 8500 GT card and is not excessive. We simply place a wattage meter in between the PSU and the power socket. It's not the most accurate way to test, as you have to consider PSU efficiency as well, but it's the closest thing we can do.
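To get a feel for how much PSU efficiency matters here, a minimal Python sketch follows. The 80% efficiency figure is purely an assumption for illustration (we did not measure it), and `dc_load_watts` is a hypothetical helper name.

```python
def dc_load_watts(wall_watts: float, efficiency: float) -> float:
    """Estimate the DC power reaching the components from the AC draw at the wall."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in the range (0, 1]")
    return wall_watts * efficiency


# 188 W is the peak we read at the wall socket; 0.80 is an ASSUMED PSU efficiency.
estimated_load = dc_load_watts(188, 0.80)
print(f"Estimated DC load: {estimated_load:.0f} W")
```

With a less efficient PSU the same wall reading would mean an even lower real load on the components, which is why the wall figure should be read as an upper bound.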

My recommendations:

- A GeForce 8500 GT requires you to have a 350 Watt power supply unit if you use it in a high-end system (high-spec CPU/memory, lots of optical drives and hard disks). That power supply needs to have (in total) at least 22 Amps available on the 12 Volt rails.
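As a quick sanity check on that amperage figure, the sketch below converts the 12 Volt rail rating into Watts (power = current x voltage). `rail_watts` is a hypothetical helper name, and the calculation simply assumes the combined rating can be drawn across all 12 Volt rails together.

```python
def rail_watts(amps: float, volts: float = 12.0) -> float:
    """Power available on a rail: current multiplied by voltage."""
    return amps * volts


# 22 Amps in total on the 12 Volt rails, as recommended above.
print(rail_watts(22))  # 264.0
```

So of the 350 Watts on the label, roughly 264 Watts should be deliverable on the 12 Volt rails, which feed the CPU, the graphics card and the drive motors.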

There are many good PSUs out there; please do have a look at our many PSU reviews, as we have loads of recommended PSUs for you to check out in there. What would happen if your PSU can't cope with the load?

- bad 3D performance

- crashing games

- spontaneous reset or imminent shutdown of the PC

- freezes during gameplay

- PSU overload can cause it to break down

Noise Levels coming from the graphics card

When graphics cards produce a lot of heat, usually that heat needs to be transported away from the hot core as fast as possible. Often you'll see massive active fan solutions that can indeed get rid of the heat, yet all the fans these days make the PC a noisy son of a gun. I'm doing a little try-out today with noise monitoring, so basically the test we do is extremely subjective. We bought a certified dBA meter and will start measuring how many dBA originate from the PC. Why is this subjective, you ask? Well, there is always noise in the background: from the streets, from the HD, the PSU fan and so on, so this is by a mile or two not a precise measurement. You could only achieve an objective measurement in a sound test chamber.

| TYPICAL SOUND LEVELS | | |
| --- | --- | --- |
| Jet takeoff (200 feet) | 120 dBA | |
| Construction Site | 110 dBA | Intolerable |
| Shout (5 feet) | 100 dBA | |
| Heavy truck (50 feet) | 90 dBA | Very noisy |
| Urban street | 80 dBA | |
| Automobile interior | 70 dBA | Noisy |
| Normal conversation (3 feet) | 60 dBA | |
| Office, classroom | 50 dBA | Moderate |
| Living room | 40 dBA | |
| Bedroom at night | 30 dBA | Quiet |
| Broadcast studio | 20 dBA | |
| Rustling leaves | 10 dBA | Barely audible |

The human hearing system has different sensitivities at different frequencies. This means that the perception of noise is not at all equal at every frequency. Noise with significant measured levels (in dB) at high or low frequencies will not be as annoying as it would be when its energy is concentrated in the middle frequencies. In other words, the measured noise levels in dB will not reflect the actual human perception of the loudness of the noise. That's why we measure the dBA level. A specific circuit is added to the sound level meter to correct its reading in regard to this concept. This reading is the noise level in dBA. The letter A is added to indicate the correction that was made in the measurement. Frequencies below 1 kHz and above 6 kHz are attenuated, whereas frequencies between 1 kHz and 6 kHz are amplified by the A-weighting.
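For the curious, the A-weighting correction can be approximated in a few lines of Python. This is a sketch using the commonly published analog A-weighting formula (normalized to 0 dB at 1 kHz), not a description of what the circuit in our meter actually does; `a_weighting_db` is a hypothetical function name.

```python
import math


def a_weighting_db(freq_hz: float) -> float:
    """Approximate A-weighting gain in dB at freq_hz (0 dB at 1 kHz)."""
    f2 = freq_hz ** 2
    numerator = (12194.0 ** 2) * f2 ** 2
    denominator = (
        (f2 + 20.6 ** 2)
        * math.sqrt((f2 + 107.7 ** 2) * (f2 + 737.9 ** 2))
        * (f2 + 12194.0 ** 2)
    )
    # The +2.0 dB offset normalizes the curve to roughly 0 dB at 1 kHz.
    return 20.0 * math.log10(numerator / denominator) + 2.0


# Low and very high frequencies come out negative (attenuated),
# the middle band around 2-4 kHz comes out slightly positive (amplified).
for freq in (100, 1000, 2500, 10000):
    print(f"{freq:>6} Hz: {a_weighting_db(freq):+.1f} dB")
```

A low hum at 100 Hz is pushed down by roughly 19 dB, which is why a deep fan drone shows up far less in a dBA reading than a whine in the 2 to 4 kHz range.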

The test

We start up a benchmark and leave it running for a while. The fan rotational speed remains constant. We take the dBA meter, move 75 cm away and then aim the device at the active fan on the graphics card.

We measure roughly 42 dBA on this card, so noise-wise this is pretty normal. As always I have to state that this is a very subjective test and that the dBA level includes all noise in the environment.
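To show how much the environment can colour a reading like this, here is a small Python sketch that subtracts a background level from a total reading. Decibels are logarithmic, so the subtraction has to happen on the linear power scale. The 38 dBA background level is a made-up value for illustration (we did not measure the room with the PC switched off), and `subtract_background_db` is a hypothetical helper name.

```python
import math


def subtract_background_db(total_db: float, background_db: float) -> float:
    """Estimate a source's level by removing background noise from a total reading."""
    if background_db >= total_db:
        raise ValueError("background must be quieter than the total reading")
    total_power = 10 ** (total_db / 10)            # dB -> linear power ratio
    background_power = 10 ** (background_db / 10)
    return 10 * math.log10(total_power - background_power)


# 42 dBA is our reading; 38 dBA is an ASSUMED background level.
card_only = subtract_background_db(42.0, 38.0)
print(f"Estimated level without background: {card_only:.1f} dBA")
```

Under that assumption the card on its own would sit a little under 40 dBA, so a fairly quiet room already shifts the number by a couple of dBA.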

PureVideo HD

The GeForce 8600 and 8500 products released in April have a newly revised video engine that will help you to decode all that Blu-ray and HD-DVD madness. This engine and its respective software are labeled PureVideo HD.

The video engine provides HD video playback up to resolutions of 1080p. G84 and G86 support hardware accelerated decoding of H.264 video as well. The cards also feature advanced post-processing video algorithms. Supported algorithms include spatial-temporal de-interlacing, inverse 2:2, 3:2 pull-down and 4-tap horizontal and 5-tap vertical video scaling.

PureVideo HD is a video engine built into the GPU (this is dedicated core logic). It combines dedicated GPU-based video processing hardware, software drivers and software-based players that accelerate decoding and enhance the image quality of high-definition video in the following formats: H.264, VC-1 and MPEG-2 HD.

In my opinion two key factors are a big advantage. First off, it offloads the CPU by allowing the GPU to take over a huge chunk of the workload. HDTV decoding of a VC-1 file, for example, can be very demanding for a CPU. These media files can easily peak at 20 Mbit/sec, as HDTV streams offer high-resolution playback at 1280x720p or even 1920x1080p without frame drops and image quality loss. Secondly, there are image quality enhancements like deblocking.

The engine got revised. The previous engine was already rather good. It did get a little better though, as the new engine offloads another chunk of what the CPU normally does: it now handles bitstream processing (the format of the data found in a stream of bits used in a digital communication or storage application) and a function called inverse transform as well. Unfortunately, the biggest format being used right now is VC-1 and NVIDIA does not fully handle its bitstream decoding.

To be able to use PureVideo HD you'll need a GeForce 8600 or 8500 and either the PureVideo software or, highly recommended, the latest PowerDVD.

We have not finalized our PureVideo HD results, but during playback of HD content expect slightly higher CPU utilization than on the 8600 GT, and for HQV-HD expect roughly 45 points. Since the card is not HDCP capable we cannot recommend it for an HTPC system.