GPUs - quality modes and VRAM usage

The graphics cards tested

In this article, we test the following cards at a graphics quality level that delivers a proper PC experience: the quality mode shown above, with Vsync disabled. The graphics cards used in this test are:

- GeForce GTX 1660

- GeForce GTX 1070

- GeForce GTX 1080

- GeForce GTX 1080 Ti

- GeForce GTX 1660 Super

- GeForce GTX 1660 Ti

- GeForce RTX 2060

- GeForce RTX 2070

- GeForce RTX 2070 Super

- GeForce RTX 2080

- GeForce RTX 2080 Super

- GeForce RTX 2080 Ti

- GeForce RTX 3070

- GeForce RTX 3080

- GeForce RTX 3090

- Radeon RX 470

- Radeon RX 480

- Radeon RX 5500 XT (8GB)

- Radeon RX 5600 XT

- Radeon RX 570

- Radeon RX 5700

- Radeon RX 5700 XT

- Radeon RX 580

- Radeon RX 590

- Radeon RX 6800 (to be added later)

- Radeon RX 6800 XT (to be added later)

- Radeon RX Vega 56

- Radeon RX Vega 64

- Radeon VII

Image quality modes

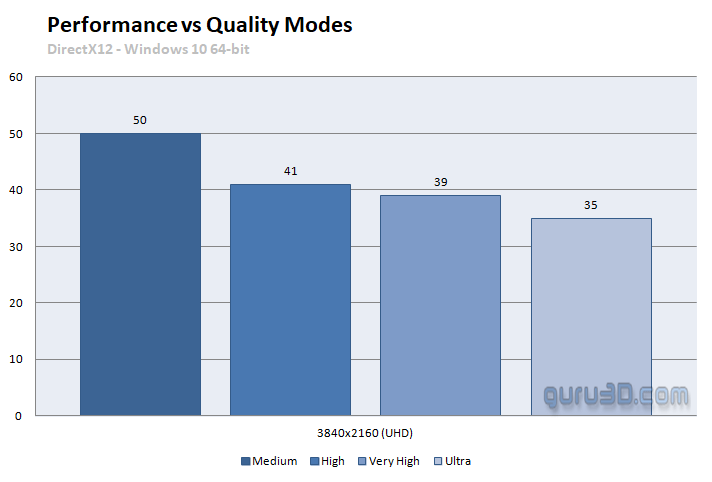

We know: as a PC gamer, you want Ultra quality settings. But as it turns out, the game can be brutal at the highest resolutions, and only the very best graphics cards will be able to handle it. If Ultra quality is too demanding, drop down a notch to High quality settings and you'll soon find that it really helps. Below is an example of FPS behavior across the primary image quality (IQ) modes. In terms of image quality, you'll be hard-pressed to notice a real difference between High and Ultra.

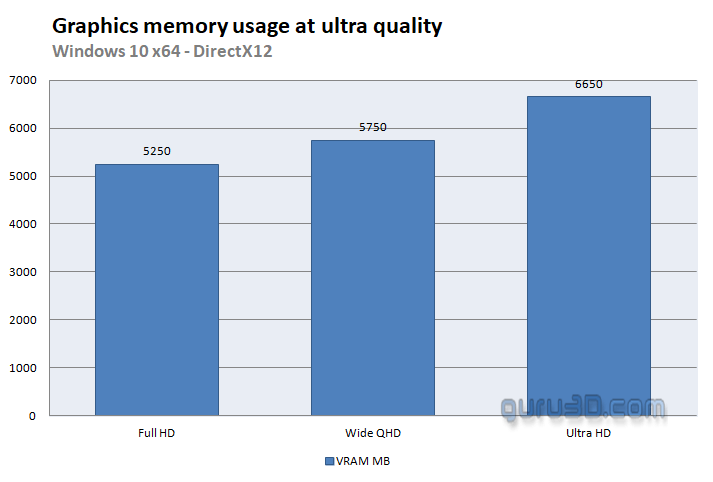

Graphics memory (VRAM) usage

How much graphics memory does the game use at your monitor resolution, across different graphics cards and their respective VRAM sizes? Let's have a look at the chart below, which covers the three main tested resolutions. The MB values listed in the chart are the graphics memory usage measured during our testing. Keep in mind that these are never absolute values. Graphics memory usage fluctuates per game scene and in-game activity. This game consumes graphics memory once you start to move around; memory utilization is dynamic and can change at any time. Often, a denser and more complex scene (one with lots of buildings or vegetation, for example) results in higher utilization. With your max quality settings, this game tries to stay close to an 8 GB threshold. At Ultra quality settings, 6 GB is the norm.

Mind you, the VRAM results are based on Ultra quality during actual gameplay, not the benchmark scene. VRAM behavior is tested with the RTX 3090, as it has 24 GB of graphics memory available.
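Because VRAM usage fluctuates from scene to scene, a single reading says little on its own. If you log your own readings, a minimal Python sketch like the one below reduces them to a peak and an average. The function name `summarize_vram` and the sample values are hypothetical, chosen purely for illustration; they are not measurements from this article.

```python
from statistics import mean


def summarize_vram(samples_mb):
    """Return (peak, average) in MB for a list of VRAM usage readings."""
    if not samples_mb:
        raise ValueError("need at least one sample")
    return max(samples_mb), mean(samples_mb)


# Hypothetical readings in MB, NOT measurements from this article.
samples = [5200, 5600, 6100, 5900]
peak, average = summarize_vram(samples)
print(f"peak: {peak} MB, average: {average:.0f} MB")
```

The peak is usually the more useful number when judging whether a card's VRAM is sufficient, since a brief spike in a dense scene is what pushes a card past its limit.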

When it comes to graphics memory, Valhalla is relatively modest. Despite enhanced streaming, significantly higher-resolution textures, and a strong focus on volumetric effects and extended level of detail (LoD), Valhalla is satisfied with a graphics card starting at 6 GB. With 8 GB you are on the safe side up to and including WQHD, and that amount is sufficient even for Ultra HD. You only need more than 8 GB if you want to use supersampling.
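The rule of thumb above can be written down as a small Python sketch. The function name `vram_verdict` and its return strings are hypothetical; only the 6 GB and 8 GB thresholds come from the text, and the result is a rough guide rather than a guarantee.

```python
def vram_verdict(vram_gb, supersampling=False):
    """Rough guidance based on the 6 GB / 8 GB thresholds described above."""
    if supersampling:
        # More than 8 GB is only needed when supersampling is used.
        if vram_gb > 8:
            return "enough for supersampling"
        return "too little for supersampling"
    if vram_gb >= 8:
        return "safe up to Ultra HD"
    if vram_gb >= 6:
        return "meets the 6 GB starting point"
    return "below the 6 GB starting point"


print(vram_verdict(6))                       # meets the 6 GB starting point
print(vram_verdict(8))                       # safe up to Ultra HD
print(vram_verdict(8, supersampling=True))   # too little for supersampling
```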